Sprung A Leak

This robot-as-art exhibit was completed as an additional project during my postdoc. The resulting exhibition ran for six months at Tate Liverpool (visited by 140,000 people) before going on tour to Haus der Kunst (Munich, Germany), M Museum (Leuven, Belgium) and Art Tower Mito (Mito, Japan).

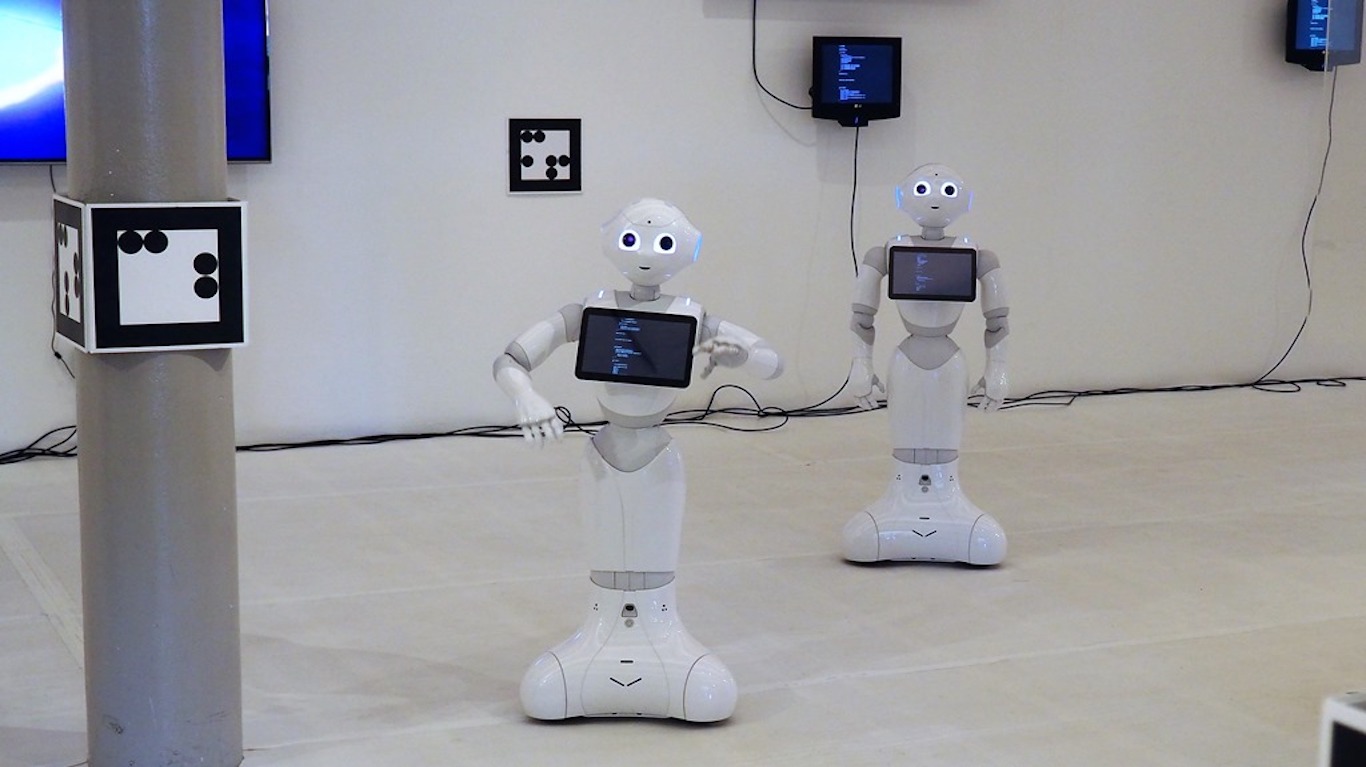

Working with a close friend (also studying Robotics) and artist Cécile B. Evans, I developed a framework that allowed two 'Pepper' robots to perform a play within a large-scale, permanent art exhibition where members of the public were encouraged to enter and freely explore the space.

This framework included a custom navigation system, as well as integration with the existing Pepper API, allowing the robots to move freely around the space and perform physical gestures while avoiding collisions with both the gallery itself and the roaming public.

Many late nights in both Tate Liverpool and Belgium were had.